Businesses everywhere are rapidly adopting artificial intelligence (AI) technologies. Companies like OpenAI, Google, and Meta are leading the charge on development, but how much of the AI revolution is marketing noise, and how much is practical for businesses? On the surface, tools like ChatGPT appear to work like the average chatbot, a technology that has been around since the mid-1960s. Look into how AI has evolved, though, and it's easy to see why now is a great time to invest in AI enhancements, especially for IT Service Management (ITSM) teams.

AI Tech vs. The Typical Chatbot

ChatGPT is the best-known AI deployment on the market today. It is the result of several technologies that have emerged in the past few years working together. Unlike typical chatbot deployments, which are limited to predetermined tasks, queries, and responses, ChatGPT is built on a large language model (LLM) that interprets the context of the text it's given and generates relevant, human-sounding responses. It can answer questions on almost any topic covered by the publicly available text it learned from. The GPT-3 model that ChatGPT descends from has 175 billion parameters (the numerical weights adjusted during training, not words), and it was trained on hundreds of billions of tokens (words and partial words). That combination is what gives it the ability to understand and respond to human input.

For businesses looking to take advantage of these emerging technologies, embedding AI within your tools can be extremely helpful. Atlassian is doing just that with Atlassian Intelligence. More of a feature set than a standalone product, Atlassian Intelligence subtly embeds AI into Atlassian Cloud Premium and Enterprise products (on-prem folks will be missing out). For users, Atlassian Intelligence enables things like plain-language search in Jira (no more frustration with Jira Query Language, or JQL, syntax!), summarizing the contents of a Confluence page, evaluating code commits in Bitbucket, and more. Just ask your AI-powered "virtual teammate" in a text field.

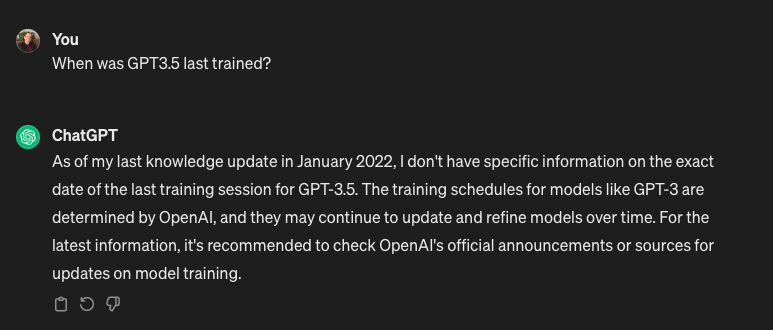

But how does Atlassian Intelligence actually answer questions about your business? A crucial factor in an LLM's ability to answer questions on 'anything' is the data it was trained on. According to ChatGPT itself, its last knowledge update was in January 2022. This means ChatGPT can't reliably answer questions about current events or any data it hasn't been trained on, which naturally includes any data that's private to an organization.

Generally speaking, this means ChatGPT can't talk reliably about anything specific to your business. Atlassian Intelligence overcomes this limitation by supplying its LLM with data from your cloud instance, so it can draw on the full context of the information in Jira projects, Confluence articles, Bitbucket repos, and other Atlassian data to answer questions accurately (with robust security considerations, of course).
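To make "supplying an LLM with your data" a little more concrete, here is a minimal Python sketch of one common pattern, often called retrieval-augmented generation: find the stored content most relevant to a question and place it in the prompt. This is not how Atlassian Intelligence is implemented (Atlassian doesn't publish those details). The document IDs, the `DOCS` dictionary, and the helper functions are hypothetical, naive keyword overlap stands in for the search a production system would use, and the sketch stops at building the prompt rather than calling any real model API.

```python
import re

# Hypothetical snippets standing in for content from a cloud instance
# (Jira issues, Confluence pages, etc.). This is not a real Atlassian API.
DOCS = {
    "CONF-12": "VPN setup guide: install the client and sign in with SSO.",
    "JIRA-481": "Printer on floor 3 is out of toner; replacement ordered.",
    "CONF-30": "Password reset policy: passwords expire every 90 days.",
}


def tokenize(text: str) -> set:
    """Lowercase word set, used for naive keyword overlap."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: dict, k: int = 2) -> list:
    """Return up to k doc ids that share at least one word with the question,
    ranked by how many words they share."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(docs[d])), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(docs[d])]


def build_prompt(question: str, docs: dict) -> str:
    """Prepend retrieved context so a model could answer from private data."""
    context = "\n".join(f"[{d}] {docs[d]}" for d in retrieve(question, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How do I reset my password?", DOCS))
```

Only CONF-30 shares words with the question, so it is the only context included. A real system would also apply the kind of permission checks mentioned above before any content reaches the prompt.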

Enterprise AI for ITSM with a slice of PIE

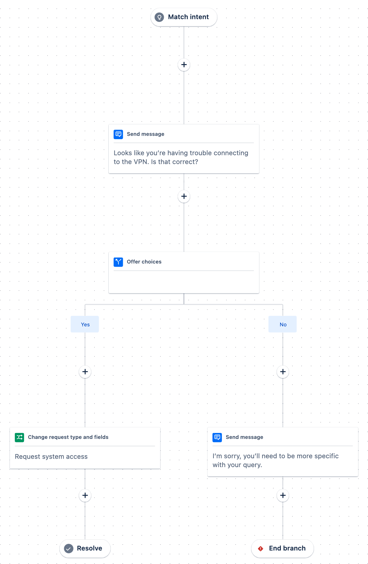

In the ITSM space, Atlassian Intelligence uses a Slack integration to give internal users a way to query your instance and receive support through a predetermined workflow engine. Atlassian Intelligence uses an LLM to determine the user's intent and map it to an item within the workflow. While this approach is useful for controlling the possible output, it also limits the system's ability to generate an "intelligent" response. Instead, responses are predetermined, reminiscent of a canned-response chatbot. And because queries go through the Slack integration, people outside your business (like customers) can't submit requests to it.
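The trade-off described above, where an LLM picks an intent but the workflow supplies the answer, can be sketched in a few lines of Python. This is a hypothetical illustration, not Atlassian's implementation: the intents, replies, and keyword matching (standing in for the LLM's intent detection) are all made up. Notice that however a question is phrased, the reply can only ever be one of the predefined strings or the fallback.

```python
import re
from typing import Optional

# Hypothetical workflow: each intent maps to one fixed, pre-written reply.
WORKFLOW = {
    "password_reset": "Use the self-service reset page, then sign in again.",
    "hardware_request": "Please submit the hardware request form.",
}

# Keyword sets stand in for the LLM's intent detection in this sketch.
INTENT_KEYWORDS = {
    "password_reset": {"password", "reset", "locked"},
    "hardware_request": {"laptop", "monitor", "keyboard"},
}

FALLBACK = "Sorry, I can't help with that. Routing you to an agent."


def classify(message: str) -> Optional[str]:
    """Return the intent whose keywords best overlap the message, or None."""
    words = set(re.findall(r"[a-z]+", message.lower()))
    best = max(INTENT_KEYWORDS, key=lambda i: len(words & INTENT_KEYWORDS[i]))
    return best if words & INTENT_KEYWORDS[best] else None


def respond(message: str) -> str:
    """Look up the canned reply for the detected intent, or fall back."""
    intent = classify(message)
    return WORKFLOW[intent] if intent else FALLBACK


for msg in ["I'm locked out, can you reset my password?",
            "My laptop screen is cracked",
            "Summarize last week's outages"]:
    print(respond(msg))
```

The third message is a reasonable request, but because it matches no predefined intent, the best this design can do is hand it off.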

Praecipio's new offering, Praecipio Intelligence for Jira Service Management (JSM), solves both of these problems with our Praecipio Intelligence Engine (PIE; also a delicious dessert), a proprietary application that can train a selection of supported LLMs on Atlassian Cloud data and business information from other sources. Praecipio Intelligence for JSM can then use all that data to automatically summarize relevant content and respond to service requests from your portal, using the full capability of generative AI. This happens in near real time, much as a human agent would respond.

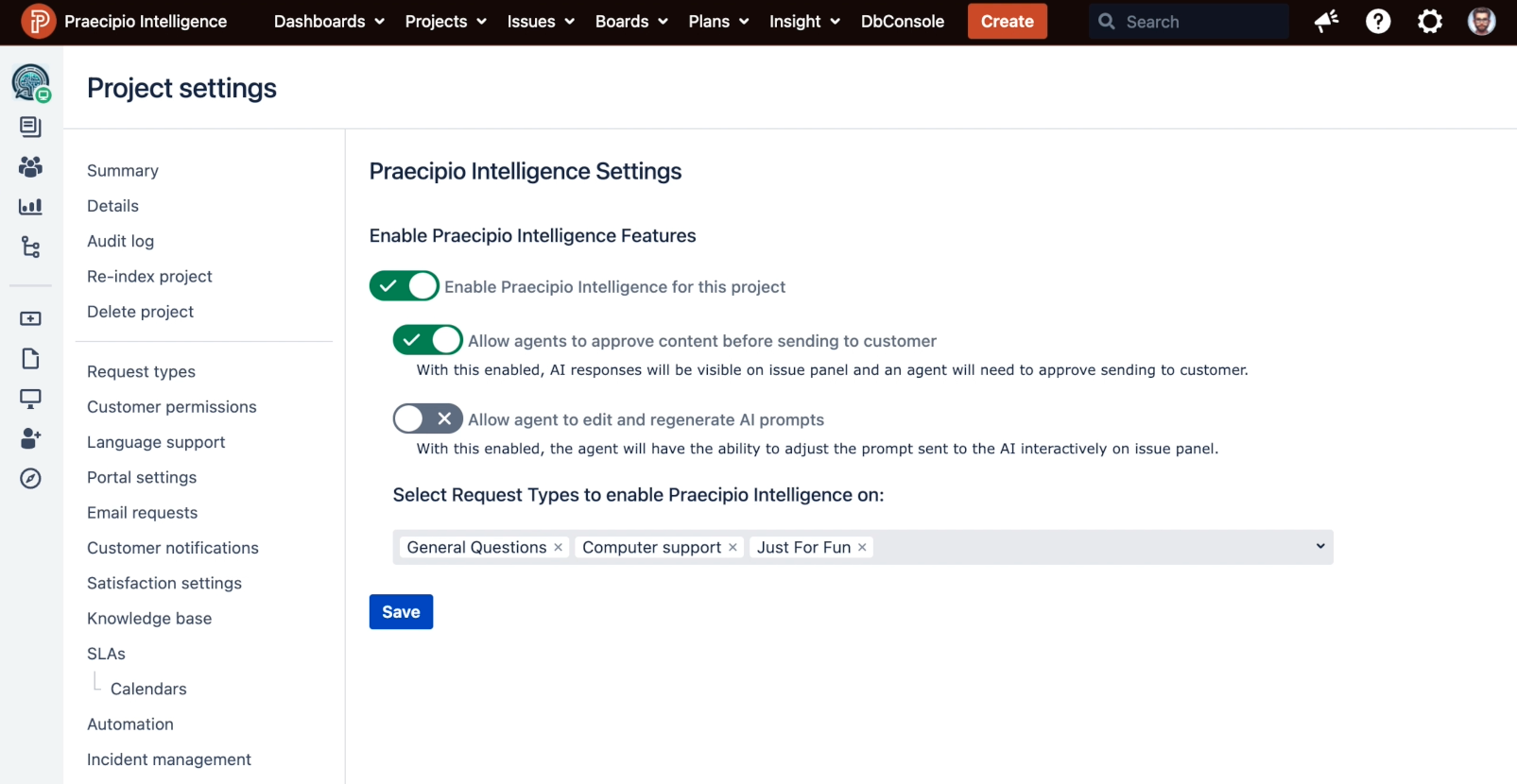

Imagine a scenario where the manuals for your company's long-standing products all live as static PDF files or some other hard-to-search medium. As long as that data is in a readable format, PIE can train an LLM to generate responses based on it. From there, customers who file requests on your support portal can get immediate responses instead of waiting for an agent to sift through those PDFs to track down the answer. Settings let Praecipio Intelligence either respond on its own or generate a draft response for a service agent to review before it goes to the customer. The choice is yours, but in either case the time savings will almost certainly reduce breaches of your time-to-first-response service level agreements (SLAs) and give customers what they need when they need it. You can learn more about Praecipio Intelligence for Jira Service Management and check out a demo here.
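The two settings described above, responding automatically or holding a draft for agent review, amount to a simple routing decision. The Python sketch below is hypothetical: `Mode`, `Ticket`, `handle_ai_reply`, and `approve_draft` are invented names for illustration and do not reflect Praecipio Intelligence's actual configuration or API.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    """Hypothetical setting controlling what happens to an AI-generated reply."""
    AUTO_SEND = "auto"       # reply goes straight to the customer
    AGENT_REVIEW = "review"  # reply waits as a draft for a human agent


@dataclass
class Ticket:
    """Minimal stand-in for a service request."""
    key: str
    drafts: list = field(default_factory=list)          # visible to agents only
    public_replies: list = field(default_factory=list)  # visible to the customer


def handle_ai_reply(ticket: Ticket, reply: str, mode: Mode) -> None:
    """Route an AI-generated reply according to the configured mode."""
    if mode is Mode.AUTO_SEND:
        ticket.public_replies.append(reply)
    else:
        ticket.drafts.append(reply)


def approve_draft(ticket: Ticket, index: int = 0) -> None:
    """An agent approves a draft, which then becomes a public reply."""
    ticket.public_replies.append(ticket.drafts.pop(index))


t = Ticket("SUP-101")
handle_ai_reply(t, "See section 4 of the installation manual.", Mode.AGENT_REVIEW)
approve_draft(t)
print(t.public_replies)
```

In review mode the agent still saves the search time, since the answer arrives pre-drafted and only needs a check before sending.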

Getting Started with Artificial Intelligence

If you're using Atlassian Cloud Premium or Enterprise products, Atlassian Intelligence features are already available to you; ask your administrator to enable them in the Atlassian Cloud admin panel. To use Praecipio Intelligence for Jira Service Management, talk to us for a consultation on the data we'll need to train on and the components required (PS: this works on Data Center too!). Working together, Atlassian Intelligence and Praecipio Intelligence for JSM offer many genuinely helpful tools that empower your staff to complete work quickly across the organization and keep customers happy. Those are two of the best outcomes any new technology adoption can deliver for a business, and they make these tools worth the hype.

| Atlassian Intelligence | Praecipio Intelligence |
| --- | --- |
| Includes AI tools throughout many Atlassian products | Specific to Jira Service Management deployments |
| Users can ask questions about instance data via a Slack integration | Internal and external customers can create requests via JSM's portal and receive AI-generated responses |
| Leverages OpenAI with access to data within your cloud instance | Can use a selection of LLMs and securely train on a variety of data sources, including Atlassian, Snowflake, Google Drive, proprietary documentation, and more |
| Deployment via Atlassian Cloud | Deployment via Atlassian Cloud or Data Center |
| Helps users in Jira, Confluence, and Bitbucket write content, search in natural language, find the status of issues, and more | Helps agents in Jira Service Management address and resolve requests with AI-generated responses based on data in the context of your business |
| A "virtual teammate" for Atlassian Cloud products that generates content and moves work | A virtual agent that supports your human agents with AI-generated responses |